I wanted a small, compact digicam that is better at taking pictures in bad light than my IXUS30, has full manual controls, a wider-angle lens, … well, no such camera exists currently. So I decided to try the F50fd: it has aperture and shutter priority modes, can take images at up to ISO 6400, and has an image stabilizer.

Size and weight: Well, it's a bit bigger and heavier than my IXUS30, but it is still small enough to fit in my pocket.

The image stabilizer: Well, what can I say. I took many images at the tele end of the zoom at a 1/5 sec shutter with the stabilizer in continuous mode, in shot-only mode, and disabled; not a single image was usable. Repeating the test at the wide-angle end at 1/5 sec, some images were OK and others were not, and the stabilizer again didn't make much of a difference. Sadly I lost patience and didn't take more than about 5 images each with the stabilizer in mode 1, in mode 2, and off, so I don't have statistically significant data for that part. What I can say, though, is that the stabilizer did not help me take a single usable image at a shutter speed at which I wouldn't be able to take a good one with a few tries without it.
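To put a number on why about 5 shots per mode is too few, here is a rough sketch using Fisher's exact test on sharp vs. blurred counts. The counts in the usage lines are hypothetical (I did not record exact numbers), and the function name is made up for this illustration.

```python
from math import comb

def fisher_exact_two_sided(sharp_a, total_a, sharp_b, total_b):
    """Two-sided Fisher exact p-value for 'sharp' counts in two groups of shots."""
    n = total_a + total_b          # all shots taken
    k = sharp_a + sharp_b          # all sharp shots
    denom = comb(n, k)

    def prob(x):
        # Hypergeometric probability that group A holds x of the k sharp shots.
        return comb(total_a, x) * comb(total_b, k - x) / denom

    observed = prob(sharp_a)
    lo = max(0, k - total_b)
    hi = min(total_a, k)
    # Sum every outcome that is at least as unlikely as the observed one.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= observed * (1 + 1e-9))

# Hypothetical: 4 of 5 sharp with stabilizer, 1 of 5 sharp without.
p_4_vs_1 = fisher_exact_two_sided(4, 5, 1, 5)   # about 0.206
# Hypothetical: 5 of 5 sharp with stabilizer, 0 of 5 without.
p_5_vs_0 = fisher_exact_two_sided(5, 5, 0, 5)   # about 0.008
```

So with 5 shots each, even a 4-to-1 split would not count as significant at the usual 5% level; only a complete split would.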

The manual control: Well, it does work, and even quite well. You can change the aperture with a single button press, and the exposure correction can also be changed with a single button press (after you have switched the buttons into the right mode, which needs 1 button press). Very sadly, to change the ISO you have to press at least 3 buttons (4 right after the camera is turned on). On the IXUS30 you need 1 button press to change ISO or exposure correction if you are in the correct menu (yes, you can take images without leaving the menu on the IXUS; this doesn't work with the F50 ISO menu), 2 button presses to switch between the ISO and exposure menus, and after power-on 2 presses to reach the exposure menu and 4 to reach the ISO menu. So, in summary, the manual controls could be made available more directly on both cameras.

Arbitrary limitations: There were a few surprises for me as I was playing around with the camera. First, the aperture priority mode is limited to a longest shutter time of 1/4 sec, while the shutter priority mode can be used with up to 1 sec. Longer exposures (up to 8 sec) are possible, but only at ISO 100.

Deleting images: I was mildly annoyed that I had to press 3 buttons to delete an image, but what was much more annoying was that the deletion comes with a nice animation which you can't disable and which you have to let finish before you can do anything else. That is, it's not hard to press the 3-button sequence a few times while the animation plays; sadly, it has no effect.

Auto focus: This one does work better than on the IXUS in low light with the focus assist lamp disabled.

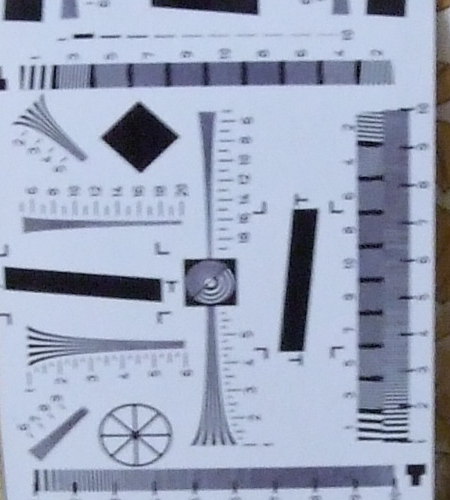

High ISO image quality: Well, I hoped that the F50 would be a lot better at taking images at high ISO. Sadly the difference is not that large, but see for yourself:

F50 ISO3200 1/4sec f/2.8

Now, how does one compare this to the IXUS30, which only goes up to ISO400? Well, one (mis)uses the exposure correction to get the exposure one wants and then fixes the brightness/contrast in software.

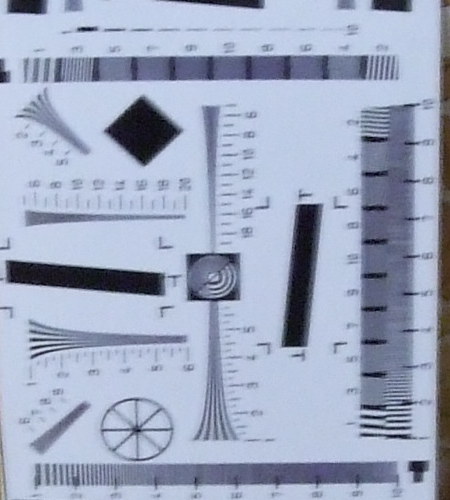

IXUS30 ISO400 -2EV 1/5sec f/2.8 (way too dark yes)

after -vf ow=7:8:16 and gimp to fix levels

So which looks better? The F50 one, of course. Let's compare it to a longer exposure of the IXUS:

ISO400 -1EV 1/2sec f/2.8

fixed up levels in gimp

and with -vf ow=7:8:16

With the 2x longer exposure I would say the IXUS is at least as good as the F50 (with the shorter exposure). And there would be thumbnail images if WordPress would generate them or if I knew how to upload several images at once instead of each one individually …
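For reference, the three exposures above can be compared in stops. This is only a small sketch that takes the nominal ISO numbers of both cameras at face value (which is an assumption); the function and variable names are made up for the example.

```python
import math

def stops_between(iso_a, shutter_a, iso_b, shutter_b, f_a=2.8, f_b=2.8):
    """Return (light, gain) in stops by which shot A exceeds shot B.

    light: difference in light reaching the sensor (shutter time and aperture)
    gain:  difference in ISO amplification, assuming comparable ISO scales
    """
    light = math.log2(shutter_a / shutter_b) - 2 * math.log2(f_a / f_b)
    gain = math.log2(iso_a / iso_b)
    return light, gain

# Exposure settings as quoted in the captions, all at f/2.8.
f50 = (3200, 1 / 4)
ixus_short = (400, 1 / 5)
ixus_long = (400, 1 / 2)

light_short, gain_short = stops_between(*f50, *ixus_short)
light_long, gain_long = stops_between(*f50, *ixus_long)

print(f"F50 vs IXUS 1/5s: light {light_short:+.2f}, gain {gain_short:+.2f} stops")
print(f"F50 vs IXUS 1/2s: light {light_long:+.2f}, gain {gain_long:+.2f} stops")
```

Under that assumption, the 1/5 sec IXUS shot got about a third of a stop less light than the F50 shot and has to be brightened by roughly 3.3 stops in software, while the 1/2 sec shot got one full stop more light and needs about 2 stops of brightening.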

ISO values: Well, the more I played with the F50, the more I noticed that its ISO values don't match the IXUS ones. That is, the IXUS30 at ISO400 seems to match approximately ISO600 on the F50 (matching here means same shutter, same aperture and equally bright final images).
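Expressed in stops, that mismatch looks like this. It is a rough sketch built on my eyeballed ISO400 vs. ISO600 match, and it assumes the same ratio holds over the whole ISO range, which I have not verified; the names are made up for the example.

```python
import math

IXUS_ISO = 400          # IXUS30 setting
F50_MATCHING_ISO = 600  # approximate F50 setting giving equally bright images

# How many stops the F50 scale is shifted relative to the IXUS scale.
offset_stops = math.log2(F50_MATCHING_ISO / IXUS_ISO)

def f50_to_ixus_scale(f50_iso):
    """Convert an F50 ISO value to the roughly equivalent IXUS30-scale value."""
    return f50_iso * IXUS_ISO / F50_MATCHING_ISO

print(f"offset: {offset_stops:.2f} stops")
print(f"F50 ISO3200 is roughly ISO{f50_to_ixus_scale(3200):.0f} on the IXUS scale")
```

So the offset is a bit more than half a stop, and the F50's ISO3200 would be closer to ISO2100 if measured the way the IXUS measures it.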

Colors: Well, the F50 is about as (in)accurate as the IXUS30, though the two are inaccurate in different ways.

Will I keep the F50? Probably not. The improvement over the IXUS30 is too small, and I also don't like the heavy noise reduction the camera does; it becomes noticeable already at ISO400. The IXUS30 doesn't do this, though more recent IXUS versions seem to also follow the trend of butchering images with noise reduction.

I'll upload more pictures tomorrow …