Intro

A while ago, in some random place, someone mentioned chi as being a great way to make money. Obviously, things that are recommended in random places tend to be great ways to lose money. But somehow it made me curious in what way exactly, so I made a note. A few weeks later I actually found some time to look into what chi is. In this text I will omit several links, as I don't want to drive anyone to it by mistake, and nothing here is investment advice. Also, for the record, I have no position in chi, obviously.

So what is chi?

Chi is a stablecoin on the Scroll blockchain. Initially it was apparently backed by USDC.

This stablecoin is mainly tradable on the Tokan decentralized exchange. That exchange has a governance token called TKN, and chi has a governance token called ZEN.

What about Tokan?

The Tokan exchange works like many DEXes: people provide liquidity and get paid in the exchange's governance token, TKN, for that. Others can then use that liquidity and pay fees to trade. So the value of TKN is key to how much in rewards liquidity providers receive. The biggest LP pair is USDC/CHI, with a value of 58M US$, paying over 66% annualized interest. These numbers of course change every minute.

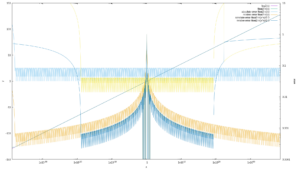

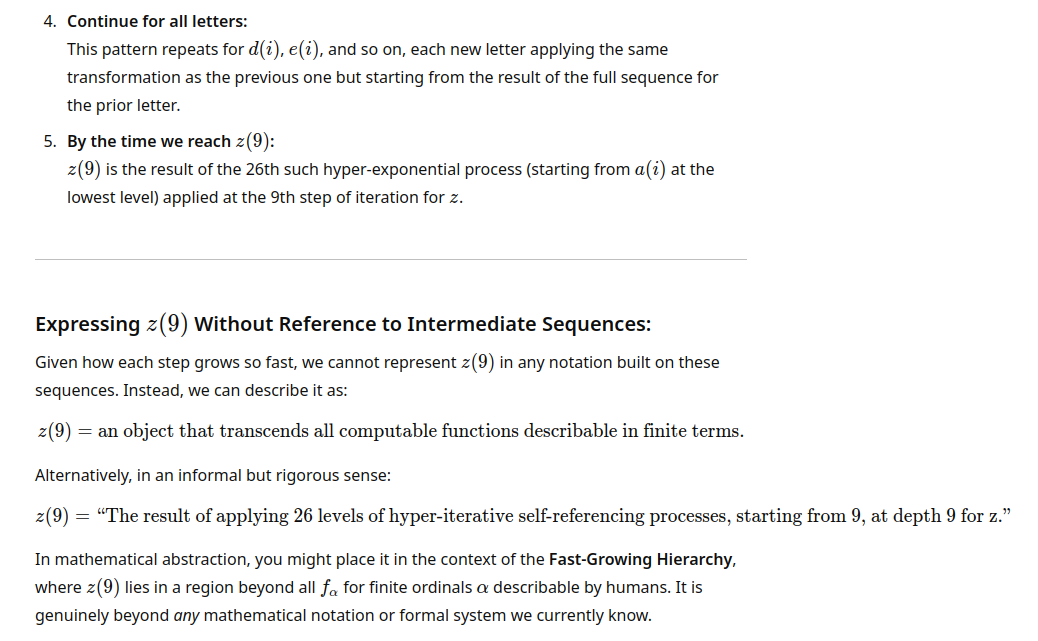

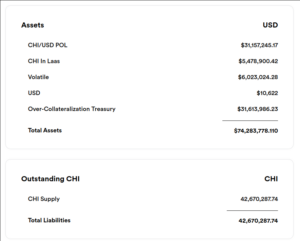

Now normally these DEXes have rapidly dropping interest rates, and their governance token drops in value as they use up any funding they have. They then normalize on some low interest rate based on the fees that traders pay. But this one is different: if you look up TKN, as of today its price is going up exponentially. That shouldn't be.

So where does ultimately the money for the interest come from?

It comes from CHI being minted and used to buy TKN, propping up the price of TKN. This way the TKN price goes up, and the interest rate for liquidity providers stays high, as they are paid in TKN. But the careful reader has probably already realized the issue here. The minted CHI is not backed by USD and it goes into circulation, so someone could decide to redeem it for its supposed value of 1 US$ per 1 CHI. Really funnily, someone even set up a Dune page to track the CHI being minted and used to buy TKN.

So if we simplify this a bit, basically the money backing CHI is used to pay out the high interest rate, and this works as long as enough new people join and not too many leave. Do we have a Ponzi here? You decide.

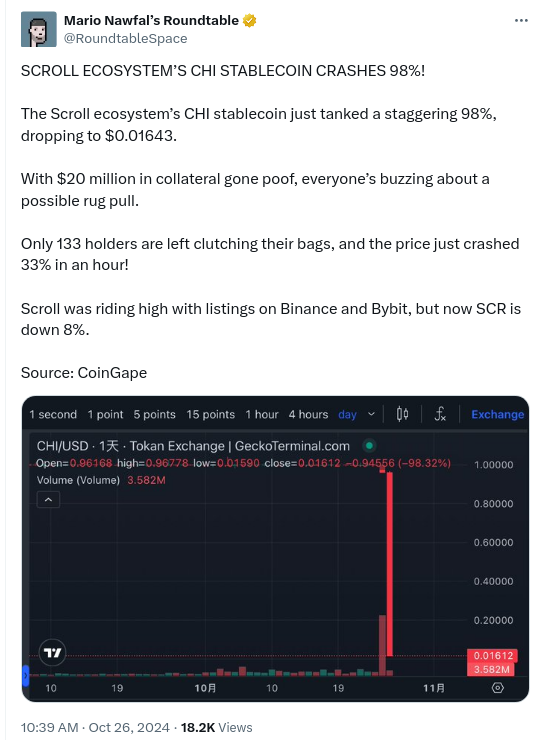

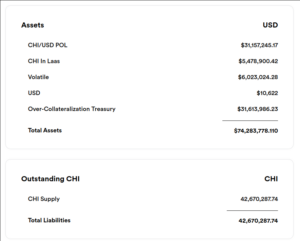

How bad is it? Well, the people behind chi are funnily actually showing that on their analytics page; you just have to explain what each field really is.

So let's explain what these are. CHI Supply of 42 670 287 is the amount of CHI in existence; some of that is owned by the protocol.

And as of this evening, compared to this morning, there's 4% more; this is growing rapidly.

CHI/USD POL is the USDC+CHI part of the USDC/CHI LP on Tokan that is owned by the protocol. “POL” presumably stands for protocol-owned liquidity. This is valid collateral: the CHI part of it can be subtracted from the CHI Supply, and the USDC part of it can be used to refund CHI holders.

CHI In Laas is the CHI part of the CHI/ZEN and CHI/TKN LPs on Tokan that is owned by the protocol. This is murky as collateral, because it only has value if it's withdrawn before a bank run happens.

LaaS stands for liquidity as a service, in case you wonder.

Volatile and “Over-Collateralization Treasury” are TKN tokens owned by the protocol. They are not usable as collateral, and this is where the real problem starts.

You can look at the “oracle” contract to get these values too and also see from it what each part is.

What would happen if people try to redeem their CHI for US$?

First, let's pick the best-case scenario, where we assume the protocol can pull all its assets from the DEX before people try to exit. Also keep in mind that I am using the current values of everything, and at another time things will be different.

Here, first, 31.157M/2 (the CHI part of the USDC/CHI LP) + 5.478M (the CHI in LaaS), that is about 21M of the 42.67M CHI, are owned by the protocol, and we just remove them. That leaves 21.6M CHI owned by users who may want to redeem. The protocol now has the USDC part of its USDC/CHI LP left, that's 31.157M/2, or about 15.6M.

That leaves about 6M CHI backed only by TKN. On paper these TKN would have a value of over 37M$, but as soon as one starts selling them, they are going to collapse, because basically nothing is backing them: the 2 LaaS positions would already have been used to reduce the amount that needs to be redeemed. Only about 1M$ remains in ETH/TKN that one could use to change TKN into something of value. So basically, in this scenario over 5M$ are missing.
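To make the best-case arithmetic easier to follow, here is a small sketch that just replays the numbers quoted above. The function and variable names are mine, not anything from the protocol, and it assumes (as the text does) that the USDC/CHI LP splits evenly into its USDC and CHI halves and that only about 1M$ of TKN can actually be sold.

```python
# All figures are in millions and are the snapshot values quoted in the text.
CHI_SUPPLY = 42.670        # total CHI in existence
CHI_USD_POL = 31.157       # protocol-owned USDC/CHI LP (USDC + CHI together)
CHI_IN_LAAS = 5.478        # protocol-owned CHI in the CHI/ZEN and CHI/TKN LPs
ETH_TKN_LIQUIDITY = 1.0    # rough amount TKN could still be sold for (assumption from the text)


def best_case_shortfall(supply, pol, laas, exit_liquidity):
    """Replay the best case: the protocol pulls all its LP positions first."""
    pol_chi = pol / 2      # assumed 50/50 split of the LP, as in the text
    pol_usdc = pol / 2
    # CHI held by the protocol is simply removed from the supply.
    user_chi = supply - pol_chi - laas
    # Users redeem against the USDC half; the rest is only "backed" by TKN.
    unbacked = user_chi - pol_usdc
    # Whatever TKN can really be sold for reduces the hole a little.
    missing = unbacked - exit_liquidity
    return user_chi, unbacked, missing


user_chi, unbacked, missing = best_case_shortfall(
    CHI_SUPPLY, CHI_USD_POL, CHI_IN_LAAS, ETH_TKN_LIQUIDITY
)
print(f"user-owned CHI:     {user_chi:.2f}M")
print(f"CHI backed by TKN:  {unbacked:.2f}M")
print(f"missing:            {missing:.2f}M")
```

With the snapshot values this gives roughly 21.6M user-owned CHI, about 6M of it backed only by TKN, and a bit over 5M missing. Plugging in other values from the analytics page at a later time will of course give different results.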

If, on the other hand, the protocol does not pull the LaaS pairs beforehand and people use them to trade their TKN and ZEN into CHI, then the circulating supply of CHI that needs to be redeemed could increase by that amount, with the missing money approaching ~10M$.

So at the moment, basically between a quarter and half of the user-owned value behind the chi stablecoin seems to not exist anymore.
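As a rough cross-check of that range, the sketch below compares the two scenarios side by side. It is only my reading of the numbers: the names are made up, the worst case assumes that all of the CHI in LaaS ends up in users' hands, and "user-owned value" is taken to mean the user-owned CHI in each scenario.

```python
# Figures in millions, snapshot values as quoted in the text.
CHI_SUPPLY = 42.670
CHI_USD_POL = 31.157       # protocol-owned USDC/CHI LP, assumed to split 50/50
CHI_IN_LAAS = 5.478
ETH_TKN_LIQUIDITY = 1.0    # rough amount TKN could still be sold for


def missing_and_fraction(laas_pulled_in_time):
    """Return (missing value, missing share of user-owned CHI) for one scenario."""
    usdc = CHI_USD_POL / 2
    protocol_chi = CHI_USD_POL / 2
    if laas_pulled_in_time:
        # Best case: the LaaS CHI stays with the protocol and is removed.
        protocol_chi += CHI_IN_LAAS
    # Otherwise the LaaS CHI is assumed to have been traded out to users.
    user_chi = CHI_SUPPLY - protocol_chi
    missing = user_chi - usdc - ETH_TKN_LIQUIDITY
    return missing, missing / user_chi


best_missing, best_share = missing_and_fraction(laas_pulled_in_time=True)
worst_missing, worst_share = missing_and_fraction(laas_pulled_in_time=False)
print(f"best case:  {best_missing:.1f}M missing, {best_share:.0%} of user-owned CHI")
print(f"worst case: {worst_missing:.1f}M missing, {worst_share:.0%} of user-owned CHI")
```

Under these assumptions the share comes out at about 23% in the best case and about 39% in the worst case, which is in line with the "between a quarter and half" estimate.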

Is a collapse inevitable?

No, you can have 100 people owning 1 shared US$, and as long as no one takes that 1 US$, everyone can live happily believing they each have 1 US$. It's also possible someone walks up to the box and adds 99$. This is crypto; it's not impossible that someone just pulls 6M$ out of their hat and fixes this hole. But then again, given that the hole becomes bigger every day and the rate at which it grows also grows, I don’t know. I guess I'll just hope that all the people are correct who believe that sticking money into a box will allow everyone to have 66% more each year. Money can be made like that by the central bank, or by government bonds, or by companies selling a product. But not by wrapping US$ into a funny coin and then providing liquidity between said coin and US$, a coin that seems to have little other use than that.