Correlations in randomly initialized PCG-DXSM

In the previous blog entry I showed many bad correlations in PCG-DXSM, but those cases always had at least 2 non-random values: either a seed and an increment, or 2 increments. And even though one could create more than a septillion of these for each seed/increment, that's not how PCG-DXSM should be used.

So the natural question is: can this happen if PCG-DXSM is used how it's supposed to be, that is, initialized with a strongly random seed and increment?

The short answer is: given the right circumstances, yes.

The following text is structured like this:

- Some basic and known concepts about LCGs to better illustrate their structure

- Summary of what we currently know about the correlations

- Some actual tests and software to quantify these specific correlations

- A hypothetical example showing that this can be a real issue under the right circumstances

- A workaround

- A Solution

- Some final words

- More issues

Some basic and known concepts about LCGs to better illustrate their Structure

In the algebraic structure of LCGs with identical multipliers and operations mod 2^m, LCG(v_0, increment) can be seen as a vector whose elements are defined by v_i = v_(i-1) * multiplier + increment (mod 2^m). We can add 2 such vectors and thus LCGs: LCG(seed1, increment1) + LCG(seed2, increment2) = LCG(seed2+seed1, increment2+increment1). From this we can immediately see that the associative and commutative laws hold and that there is an inverse and an identity element.
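The addition rule can be checked numerically. The following is a minimal sketch in C, assuming a 64-bit modulus instead of the 128 bits PCG-DXSM uses (so that plain `uint64_t` wrap-around does the reduction) and an example multiplier. The names `lcg_next` and `lcg_sum_matches` are made up for this illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: example multiplier only; the identity holds for any multiplier. */
#define MULT 0xda942042e4dd58b5ULL

/* One LCG step modulo 2^64 (unsigned arithmetic wraps around). */
static uint64_t lcg_next(uint64_t v, uint64_t inc) {
    return v * MULT + inc;
}

/* Returns 1 if LCG(s1,i1) + LCG(s2,i2) equals LCG(s1+s2, i1+i2)
   element by element for the first n elements, 0 otherwise. */
static int lcg_sum_matches(uint64_t s1, uint64_t i1,
                           uint64_t s2, uint64_t i2, int n) {
    assert(n > 0);
    uint64_t a = s1, b = s2, c = s1 + s2;
    for (int k = 0; k < n; k++) {
        if (a + b != c)
            return 0;
        a = lcg_next(a, i1);
        b = lcg_next(b, i2);
        c = lcg_next(c, i1 + i2);
    }
    return 1;
}
```

Calling `lcg_sum_matches(1, 3, 5, 7, 1000)` returns 1, and the same holds for any other seeds and increments, because the identity follows directly from the distributive law.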

Thus LCGs with identical multipliers form an abelian group. The identity element is LCG(0,0)_i = 0. For each constant vector v_i = C there's a corresponding LCG(C, C*(1 - multiplier)). The increment of such “bad” LCGs is even when the multiplier is odd. It is thus common to disallow even increments. Nonetheless, even when they are disallowed in an implementation, they do not disappear from the algebraic structure. That's because, if one allows only odd numbers, the difference between 2 odd numbers is still even.

If we looked at LCGs as polynomials instead of vectors, then we could furthermore introduce a multiplication by x mod x^(2^M) - 1 to represent a cyclic shift of all the elements of one vector. This would allow even more powerful tools to be used, but we don't do this in this text. What we now have is basically a very small set of “primitive” LCGs that can then be offset by a constant:

LCG(C, I + C*(1 - multiplier)) = LCG(0,I) + LCG(C, C*(1 - multiplier)). The number of these primitive LCGs depends on the number of factors of 2 in 1 - multiplier. For PCG-DXSM we have 2 factors of 2, but even increments are disallowed, so we have 2 such primitive vectors, which is all the diversity that exists in this LCG; all other vectors are just offset by a constant from them. Looking at this you might ask: if there are 2 LCG outputs that have a 128-bit constant added, how do these 2^129 cases fit in a 127-bit “odd” increment? They do because 4 live as cyclic shifts in each vector.
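The constant-offset decomposition can be illustrated the same way. This is a sketch under the same assumptions as before (64-bit modulus as a scaled-down model, an example multiplier that is 1 modulo 4, hypothetical helper names). It also shows why the offset never changes the increment modulo 4: `1 - MULT` carries two factors of 2.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: example multiplier with MULT % 4 == 1, so 1 - MULT is divisible by 4. */
#define MULT 0xda942042e4dd58b5ULL

static uint64_t lcg_next(uint64_t v, uint64_t inc) {
    return v * MULT + inc;
}

/* Increment of the LCG that equals LCG(0, base_inc) with constant c added. */
static uint64_t offset_increment(uint64_t base_inc, uint64_t c) {
    return base_inc + c * (1 - MULT);   /* wraps modulo 2^64 */
}

/* Returns 1 if LCG(c, I + c*(1-MULT)) == LCG(0, I) + c for the first n elements. */
static int offset_stream_matches(uint64_t base_inc, uint64_t c, int n) {
    assert(n > 0);
    uint64_t p = 0;                               /* primitive LCG(0, I) */
    uint64_t q = c;                               /* offset LCG          */
    uint64_t q_inc = offset_increment(base_inc, c);
    for (int k = 0; k < n; k++) {
        if (q != p + c)
            return 0;
        p = lcg_next(p, base_inc);
        q = lcg_next(q, q_inc);
    }
    return 1;
}
```

Whatever constant is chosen, `offset_increment(I, c)` has the same residue modulo 4 as `I`, which is why only the increments 1 and 3 (mod 4) give distinct primitive sequences once even increments are excluded.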

Summary of what we currently know about the correlations

In the previous section we have shown that we can offset the LCG output by a constant and that all LCG outputs basically are offset from one of 2 sequences by a constant. Or, if one considered the 4 cyclic shifts too, then really only half would be unique; it's a matter of viewpoint.

Offsets in the low half

In DXSM the LCG output is split into 2 64-bit words: the low one is or-ed with 1 so it's always odd, and the high one is passed through xorshift, multiply, xorshift before being multiplied with the low one.
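As a reference point for the following sections, here is a sketch of that output function in C. The shift amounts (32 and 48) follow the description in this text; the multiplier constant is an assumption taken from the commonly published "cheap multiplier" variant and is not listed in this post. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: multiplier of the commonly published DXSM variant. */
#define DXSM_MULT 0xda942042e4dd58b5ULL

/* DXSM output permutation on the two 64-bit halves of the 128-bit LCG state. */
static uint64_t dxsm_output(uint64_t hi, uint64_t lo) {
    lo |= 1;             /* low half is forced odd                     */
    hi ^= hi >> 32;      /* first xorshift: high 32 bits into low 32   */
    hi *= DXSM_MULT;     /* multiply by the constant                   */
    hi ^= hi >> 48;      /* second xorshift: high quarter into low one */
    return hi * lo;      /* final multiplication with the low half     */
}

/* Sanity check: because of the or, bit 0 of the low half never matters. */
static void dxsm_check_low_bit_ignored(uint64_t hi, uint64_t lo) {
    assert(dxsm_output(hi, lo) == dxsm_output(hi, lo | 1));
}
```

Note that a high half of 0 always yields output 0 regardless of the low half, since every step maps 0 to 0.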

If we look at 2 LCGs that are offset by D so that only the low half is offset but the high half stays the same, this can be represented (in C style notation) as:

(L[i] | 1)*H[i] vs. ((L[i] + D) | 1)*H[i]

Both (L[i] | 1) and ((L[i] + D) | 1) are odd as they explicitly have a 1 or-ed in, so this can also be written as

(2X[i] + 1) * H[i] vs. (2Y[i] + 1) * H[i]

their difference

(2X[i] + 1)*H[i] - (2Y[i] + 1)*H[i] = (2X[i] + 1 - 2Y[i] - 1)*H[i] = 2(X[i] - Y[i])*H[i]

As can be seen, their difference is even, thus their lowest bit is correlated; that is not to say higher bits are not.
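The parity argument is easy to confirm in code. The sketch below only models the final multiplication, with `h` standing for the already mixed high half H[i]; the function names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Difference of the two final products when the low halves differ by d
   and the mixed high half h is shared. */
static uint64_t final_mul_difference(uint64_t l, uint64_t d, uint64_t h) {
    uint64_t a = (l | 1) * h;
    uint64_t b = ((l + d) | 1) * h;
    uint64_t diff = a - b;
    assert((diff & 1) == 0);   /* 2*(X - Y)*H is always even */
    return diff;
}

/* Returns 1 if both outputs have the same lowest bit. */
static int lowest_bits_equal(uint64_t l, uint64_t d, uint64_t h) {
    uint64_t a = (l | 1) * h;
    uint64_t b = ((l + d) | 1) * h;
    return (a & 1) == (b & 1);
}
```

Both odd factors leave the lowest bit of `h` unchanged, so the lowest output bit is the lowest bit of `h` in both generators for every choice of `l` and `d`.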

Here we have now established that instead of 2^127 uncorrelated PCG-DXSM streams there are at best 2^64.

Collisions in the first xor

The first xor mixes the high 32 bits of the high half into the low 32 bits of the high half; next the high half is multiplied by the constant multiplier and the high quarter is xored into the low quarter before the final multiplication with the low half of the LCG. It is important to recognize that if, after the first XOR, the low 49 bits of the high side of the LCG match between 2 generators, then we will have identical low bits after DXSM. Consider

MSB [15bit random][17bit 0][15bit random][17bit 0][64 bit random] LSB

The first xor stage will effectively do nothing to this. The correlation after that then depends on the low 15 random bits, which are the only difference between the two LCGs that can affect the LSB from the high half, while the low half is or-ed with 1, so it is basically a constant. If this on its own doesn't already fail statistical tests, then failure can be made more likely by observing that if we misalign 2 LCGs by a multiple of 2^(128-b), the only affected output will be in the highest b bits. So we can introduce entropy in the highest bits at will to tweak the high quarter and bring the 2 into a badly correlated state. The high quarter itself is outside the 49 bits affecting the LSB output other than by the xor. It is also observed that increasing the amount of random bits beyond 15 does not fix this issue; it just requires more effort to find correlations. For example, using 19 bits, 65536 streams, 1024 stream alignments and 4 billion samples per stream, we still find statistical anomalies.
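The 49-bit claim can be made concrete. After the first xor, the multiplication only carries information upward, and the second xorshift folds bit 48 into bit 0, so the lowest output bit depends on bits 0 to 48 only. The following sketch assumes the same multiplier constant as the commonly published DXSM variant; all function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: multiplier of the commonly published DXSM variant. */
#define DXSM_MULT 0xda942042e4dd58b5ULL

/* First xorshift of DXSM. */
static uint64_t first_xor(uint64_t hi) {
    return hi ^ (hi >> 32);
}

/* The first xorshift is its own inverse, so a high half with a chosen
   post-xor value y is obtained by applying it once more. */
static uint64_t hi_from_post_xor(uint64_t y) {
    uint64_t hi = y ^ (y >> 32);
    assert(first_xor(hi) == y);
    return hi;
}

/* Lowest DXSM output bit as a function of the post-xor value y.
   The final multiplier (lo | 1) is odd and does not change bit 0. */
static unsigned dxsm_lsb_after_first_xor(uint64_t y) {
    y *= DXSM_MULT;
    y ^= y >> 48;
    return (unsigned)(y & 1);
}

/* Returns 1 if clearing all bits above bit 48 leaves the lowest output bit unchanged. */
static int lsb_ignores_bits_above_49(uint64_t y) {
    uint64_t low49 = y & ((1ULL << 49) - 1);
    return dxsm_lsb_after_first_xor(y) == dxsm_lsb_after_first_xor(low49);
}
```

`lsb_ignores_bits_above_49` returns 1 for every input, which is the property the search exploits: two generators only need to agree in those 49 bits after the first xor to share their lowest output bit.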

Collisions in the 2nd xor

It is possible to introduce a delta in the low half of the high half of the LCG. This will be unaffected by the first xor and then multiplied by the multiplier. This operation is algebraically well understood, and it is possible to introduce deltas here which tend to produce poor statistics after the 2nd XOR on the low side. Such a case was also demonstrated toward the end of my last blog entry, but no attempt was made to exploit this in the software here.

Some actual tests and software to quantify these specific correlations

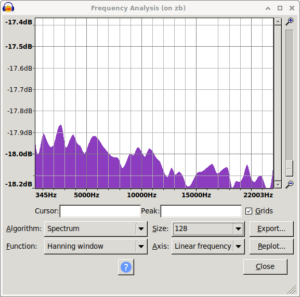

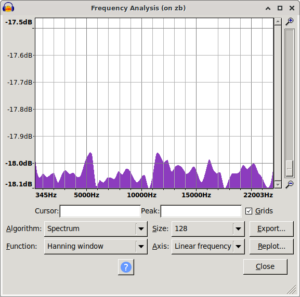

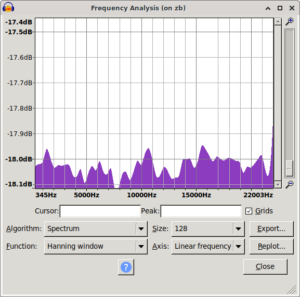

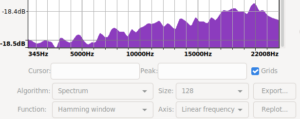

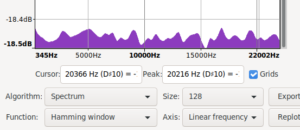

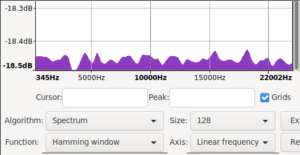

Combining the 2 cases from above with 19 bits instead of 15, we can take 16k random (increment, seed) pairs and find a pair of vectors that are correlated if they are aligned. I cannot show correlation directly on fully random values because I only have my home computer for this. Also, I actually take 64k pairs instead, so we have over a dozen pairs to work with. At this point we align one of the seeds to the other, then shift it by a random multiple of 2^(128-19) and check the correlation for 1024 shifts. For each shift we generate a stream of 2^32 values. Each tested stream will have its least significant bits interleaved or xored, and the occurrence of each bit pattern of even width from 2 to 18 bits is checked against the binomial distribution. The xor and the interleaving sometimes catch separate cases, so either alone could be used at the cost of slightly fewer cases found. Also, for XOR mainly 6 and 8 bits matter, while for interleaving the larger widths catch things.

In the test run we see a pair that showed correlations at 15 different alignments of the 1024 tested. Given that these 1024 represent a random 1/512 of the search space we consider, we expect ~8000 in the whole space for that pair. Other pairs had similar numbers, so there's no reason to believe this was an outlier. Combining all this, there seems to be a maximum of ~29 bits of diversity in the increments and about 115 bits in the seed. We can also see that many of the found correlations have multiple independent patterns that are failing tests.
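To give a feeling for what "checked against the binomial distribution" means, here is a minimal sketch of a squared z-score for a single pattern count. It is not the test code used for the runs in this post, and it does not reproduce the p-values printed in the logs; `binomial_z2` is a hypothetical helper. Reading a log entry such as `12 310( 1057180)` as "the 12-bit pattern 0x310 was seen 1057180 times in 2^32 samples" is also my interpretation.

```c
#include <assert.h>
#include <stdint.h>

/* Squared z-score of an observed count under a binomial model:
   n samples, each matching a given k-bit pattern with probability 2^-k. */
static double binomial_z2(uint64_t observed, uint64_t n, unsigned k) {
    assert(n > 0 && k >= 1 && k < 64);
    double p = 1.0 / (double)(1ULL << k);
    double expected = (double)n * p;
    double variance = (double)n * p * (1.0 - p);
    double diff = (double)observed - expected;
    return diff * diff / variance;
}
```

Under these assumptions a 12-bit pattern is expected 2^20 = 1048576 times in 2^32 samples, and a count of 1057180 gives a squared z-score of about 70.6, that is roughly 8.4 standard deviations away from the expectation.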

Source code is here

Stage 1, filling set with random increments set size:65536, stream_length:4294967296, top bits:19 Stage 2, Sorting streams Stage 3, Comparing streams pairs to consider: 37 checking pair with DELTA: 0xE5B05FFFA33E1FFF ED6E8B7EA831BA19 si0: 0x4B1D7EBE754531A6 03D07FC29934A58B,0xEC1A098AE7F3EE1C F0C3F7E28050E655 si1: 0x656D1EBED20711A6 1661F443F102EB72,0xCFC64EE4226C353F 911E2DDFC6D557E9 clean table 32 18 fill table 32 18 clean table 32 16 fill table 32 16 clean table 32 14 fill table 32 14 clean table 32 12 fill table 32 12 clean table 32 10 fill table 32 10 clean table 32 8 fill table 32 8 clean table 32 6 fill table 32 6 clean table 32 4 fill table 32 4 clean table 32 2 fill table 32 2 3B8: DELTA: 0xE3DF5FFFA33E1FFF ED6E8B7EA831BA19 -6 6( 67044071)0.000000008 checking pair with DELTA: 0x2FC51FFF99D95FFF B54A758B34402CFA si0: 0x6AF7AD68373F1ECB 388F5B99D012EDC8,0x3E41C67901EE75A1 DDAC6EB90EFCDF53 si1: 0x3B328D689D65BECB 8344E60E9BD2C0CE,0xD540FAF60FD83D88 C4D70A7957646F1B checking pair with DELTA: 0x069F600086C96000 0EB818571D7DCAD0 si0: 0x75FED5AD724E4D47 09756BA7A9BB3730,0x11E1922B5FBB1E8E 4F7311398EE8460F si1: 0x6F5F75ACEB84ED46 FABD53508C3D6C60,0x9AAEFF3A5B76A3D6 3EC2E876DEA2604F checking pair with DELTA: 0xA6DBFFFFA9631FFF ECCA24CFB00F1167 si0: 0x642B9E142D73D47D 758CA1A36D0AED70,0xEA0B9F70E0FE5C84 59DF4DCDE2F4727F si1: 0xBD4F9E148410B47D 88C27CD3BCFBDC09,0x893A4A4B113A5A3C 205C596A9D7316EB checking pair with DELTA: 0xA4107FFF2C3EC000 26C54851149E853A si0: 0xE76E9BF9B19A825A 6A986886330B2129,0x1090288CC2863F81 D609776DF7909853 si1: 0x435E1BFA855BC25A 43D320351E6C9BEF,0xCD67E2DED5A42A3C 1905C3FB9FE4351B AE: DELTA: 0xEB3EBFFF2C3EC000 26C54851149E853A 12 310( 1057180)0.000000015 12 290( 1056853)0.000000211 12 f10( 1056627)0.000001252 12 1a0( 1040569)0.000001519 12 6e0( 1056277)0.000017950 12 690( 1041143)0.000113557 12 560( 1055904)0.000270395 12 da0( 1041278)0.000299401 32E: DELTA: 0x5DEEBFFF2C3EC000 26C54851149E853A 12 5c0( 1038624)0.000000000 12 1c0( 
1057931)0.000000000 12 ec0( 1057333)0.000000004 12 ac0( 1040326)0.000000223 12 dc0( 1056653)0.000001023 12 6c0( 1041181)0.000149446 12 2c0( 1055845)0.000410364 12 9c0( 1041636)0.003602710 2AE: DELTA: 0x46FEBFFF2C3EC000 26C54851149E853A 12 4d0( 1060953)0.000000000 12 610( 1062490)0.000000000 12 760( 1060287)0.000000000 12 7a0( 1058928)0.000000000 12 8d0( 1063077)0.000000000 12 a10( 1062087)0.000000000 12 b60( 1062943)0.000000000 12 ba0( 1061416)0.000000000 22E: DELTA: 0x300EBFFF2C3EC000 26C54851149E853A 12 670( 1057881)0.000000000 12 740( 1039293)0.000000000 12 580( 1040335)0.000000239 -6 c( 67168834)0.000000783 12 570( 1056351)0.000010320 -6 2c( 67053163)0.000034817 12 f40( 1056076)0.000078670 12 d70( 1041141)0.000111924 checking pair with DELTA: 0x50007FFF5C726000 B744241DE30B69D1 si0: 0x6B787EBE72991EEA 8289751974A2A05F,0x0BBD42A86319A4D6 1545348A20D29269 si1: 0x1B77FEBF1626BEE9 CB4550FB9197368E,0xA41D07785110511F 469B72942EA5D15D checking pair with DELTA: 0x4AC8DFFF76E85FFF 5317B9650AE16599 si0: 0x87FB5321F119AC7A C56465440DFD6BBB,0x1BC3CB3CF5D7B874 DACCF5D5C924B5AF si1: 0x3D3273227A314C7B 724CABDF031C0622,0x07EE1FA4549F8160 7A648121E3A1BD43 checking pair with DELTA: 0x0D78BFFFF0FCC000 9F272846B576856A si0: 0x114FA1E4F4E52E77 6CF38EB5E44CC81B,0x79DA1F7A3E715F59 889363DD36B59C95 si1: 0x03D6E1E503E86E76 CDCC666F2ED642B1,0x4998AE764CF9EA36 A31FDF6D2069DB1D 2F9: DELTA: 0xF300DFFFF0FCC000 9F272846B576856A 1834a80( 17652)0.000000000 16 540( 68674)0.000000000 16 a80( 68749)0.000000000 16 1740( 68320)0.000000000 16 1a40( 68301)0.000000000 16 2740( 68147)0.000000000 16 2a40( 68350)0.000000000 16 3540( 68046)0.000000000 3F9: DELTA: 0xA420DFFFF0FCC000 9F272846B576856A 14 2000( 266373)0.000000215 16 3000( 63697)0.002678040 14 0( 258663)0.011878566 16 8000( 63794)0.041411834 14 1000( 265545)0.041734621 checking pair with DELTA: 0xBBE320004FF76000 82454C2874A21EF3 si0: 0x7BBAE3DB04A0B4DE 5D7DAF8022BF2D34,0xB6D14DEA456CF025 AF3C8E5BC500FBDD si1: 0xBFD7C3DAB4A954DD 
DB386357AE1D0E41,0x2E36E70296EAF807 09177110A56946B9 109: DELTA: 0xA338C0004FF76000 82454C2874A21EF3 -6 24( 67168303)0.000001275 -6 4( 67058944)0.003937258 309: DELTA: 0x0478C0004FF76000 82454C2874A21EF3 16 1000( 68200)0.000000000 16 6000( 67912)0.000000000 16 9000( 68422)0.000000000 16 a000( 68296)0.000000000 16 e000( 68322)0.000000000 14 1000( 271534)0.000000000 14 2000( 272358)0.000000000 14 0( 247561)0.000000000 189: DELTA: 0x3B88C0004FF76000 82454C2874A21EF3 14 3523( 266414)0.000000110 14 523( 266255)0.000001424 14 17a3( 266247)0.000001616 14 3e23( 266166)0.000005727 14 52f( 266127)0.000010440 -8 e8( 16806671)0.000011400 14 34a3( 266115)0.000012545 14 223( 266025)0.000048894 389: DELTA: 0x9CC8C0004FF76000 82454C2874A21EF3 14 239c( 265963)0.000122652 -8 f8( 16749417)0.000200331 14 f9c( 265926)0.000210908 14 3cac( 265900)0.000307758 -8 38( 16803599)0.002137644 -8 78( 16751532)0.006391691 14 ac( 258625)0.007053808 14 1c6c( 265614)0.016667751 checking pair with DELTA: 0x61F81FFFD0A1A000 0EDB22A63E0AD996 si0: 0x1429D0EE8B94E764 E8B02AFD0694AD82,0x17D8D7C4708639FC F80DBC31B69114A5 si1: 0xB231B0EEBAF34764 D9D50856C889D3EC,0x79A6C972B0378F58 9787503C7F7BA21D 12C: DELTA: 0x41639FFFD0A1A000 0EDB22A63E0AD996 14 82c( 267011)0.000000000 14 1bdc( 257159)0.000000000 14 282c( 257273)0.000000000 14 275c( 257468)0.000000000 14 342c( 266806)0.000000000 14 182c( 257848)0.000000050 14 27dc( 257918)0.000000159 14 75c( 266276)0.000001021 2C: DELTA: 0xBCC39FFFD0A1A000 0EDB22A63E0AD996 14 65c( 257898)0.000000114 14 19ac( 266391)0.000000161 14 15ac( 266117)0.000012167 14 29ac( 265981)0.000094048 14 332c( 258649)0.009809622 14 365c( 258698)0.019104299 14 3a5c( 258709)0.022160153 2EC: DELTA: 0x697B9FFFD0A1A000 0EDB22A63E0AD996 14 202b( 265734)0.003239245 32C: DELTA: 0x4AA39FFFD0A1A000 0EDB22A63E0AD996 14 20ac( 266407)0.000000124 14 2fec( 258349)0.000135828 14 3e6c( 258354)0.000146280 14 10dc( 258396)0.000271643 14 30dc( 265864)0.000517196 14 2f2c( 258539)0.002125153 14 aec( 
265729)0.003471746 14 3fec( 265693)0.005703141 2AC: DELTA: 0x88539FFFD0A1A000 0EDB22A63E0AD996 16 2c00( 67872)0.000000001 16 ec00( 67853)0.000000001 16 9c00( 63245)0.000000001 16 ac00( 63262)0.000000002 16 6c00( 63316)0.000000014 16 5c00( 63339)0.000000031 16 1c00( 67614)0.000003283 16 dc00( 67613)0.000003389 22C: DELTA: 0xC6039FFFD0A1A000 0EDB22A63E0AD996 14 56c( 266846)0.000000000 14 3ec( 266323)0.000000482 14 96c( 266299)0.000000708 14 105c( 258276)0.000045522 14 13dc( 258308)0.000073692 14 3a9c( 265995)0.000076435 14 c1c( 265955)0.000137963 14 a9c( 265801)0.001268171 checking pair with DELTA: 0xAEE04000493CBFFF BD570161F1BB9245 si0: 0x3232CB36E1EE4D2A A6E5C02AEC015417,0x5D4AADB8CE05CB16 60BFF633BE1922B7 si1: 0x83528B3698B18D2A E98EBEC8FA45C1D2,0x7023209E9838B9CB EDC7AD26E9D4B33B FA: DELTA: 0x91C80000493CBFFF BD570161F1BB9245 16 0( 67945)0.000000000 14 1270( 267234)0.000000000 12 80( 1061964)0.000000000 12 1b0( 1059972)0.000000000 12 270( 1062824)0.000000000 12 34c( 1059060)0.000000000 12 40f( 1058356)0.000000000 12 540( 1062857)0.000000000 checking pair with DELTA: 0xF1602000180CDFFF DDB3EEC70873FC23 si0: 0xCD641FA73D4FFD4D C1BFB916CB540340,0xD46851900454DC91 BE71D8204442D5E5 si1: 0xDC03FFA725431D4D E40BCA4FC2E0071D,0xDD28B58BCA390DD0 200B20AFEAB32681 149: DELTA: 0x46ACC000180CDFFF DDB3EEC70873FC23 -8 40( 16748835)0.000073773 -8 48( 16804298)0.000682072 14 161c( 265780)0.001704525 12 e5c( 1041888)0.019305183 49: DELTA: 0xBF0CC000180CDFFF DDB3EEC70873FC23 12 1ac( 1058326)0.000000000 12 dac( 1059512)0.000000000 -6 0( 67180422)0.000000000 -6 20( 67037873)0.000000000 12 5ac( 1039647)0.000000001 12 e5c( 1056936)0.000000108 12 9ac( 1040522)0.000001052 12 25c( 1056402)0.000007026 249: DELTA: 0xCE4CC000180CDFFF DDB3EEC70873FC23 12 16c( 1039873)0.000000005 12 69c( 1056832)0.000000250 12 920( 1040498)0.000000872 12 750( 1040800)0.000008950 12 56c( 1055931)0.000223162 12 f50( 1055478)0.005111395 12 e9c( 1041846)0.014654417 12 350( 1055194)0.033027892 C9: DELTA: 
0x82DCC000180CDFFF DDB3EEC70873FC23 16 0( 67311)0.024882147 209: DELTA: 0xEC64C000180CDFFF DDB3EEC70873FC23 14 edb( 258766)0.047457856 3C9: DELTA: 0x19BCC000180CDFFF DDB3EEC70873FC23 12 e7c( 1059061)0.000000000 12 a7c( 1039130)0.000000000 12 98c( 1039273)0.000000000 12 58c( 1039733)0.000000002 12 880( 1057062)0.000000039 12 c80( 1040191)0.000000075 12 27c( 1056979)0.000000077 12 18c( 1056842)0.000000230 1C9: DELTA: 0x0A7CC000180CDFFF DDB3EEC70873FC23 12 b0( 1058704)0.000000000 12 870( 1058321)0.000000000 12 cb0( 1058044)0.000000000 12 70( 1039197)0.000000000 12 380( 1057886)0.000000000 12 3b0( 1039348)0.000000000 12 470( 1057815)0.000000000 12 770( 1039485)0.000000000 checking pair with DELTA: 0x4BAEDFFF3117BFFF 2C6645F93365ECEA si0: 0xE40A9D2B0465523F 42BC3B3B97D36E8D,0xBB93AB3A34C78A9A 64593536E5C52775 si1: 0x985BBD2BD34D9240 1655F542646D81A3,0x3AB79415CB425F3D 57648C6C00E22BFD 194: DELTA: 0x51A95FFF3117BFFF 2C6645F93365ECEA 12 d39( 1041947)0.028354029 checking pair with DELTA: 0x98E3E00009FCA000 0A0A41967884F5D6 si0: 0xDF60BAED6D489376 8C513233A6ED5E42,0x3955813EEBEECC4A 641A81300E22E3A5 si1: 0x467CDAED634BF376 8246F09D2E68686C,0x5ACFED56DDFFBB1B A8D2EF5151DF4E1D FF: DELTA: 0xBD3F400009FCA000 0A0A41967884F5D6 12 507( 1041478)0.001219788 11F: DELTA: 0xC5D3400009FCA000 0A0A41967884F5D6 12 5fe( 1041919)0.023635555 31F: DELTA: 0x4F13400009FCA000 0A0A41967884F5D6 -6 33( 67163521)0.000085788 9F: DELTA: 0xA383400009FCA000 0A0A41967884F5D6 12 eee( 1041253)0.000250523 12 d1e( 1055609)0.002107609 12 dee( 1041717)0.006220077 37F: DELTA: 0x68CF400009FCA000 0A0A41967884F5D6 12 db( 1058017)0.000000000 12 e7( 1058942)0.000000000 12 7db( 1059479)0.000000000 12 827( 1059615)0.000000000 12 ce7( 1058144)0.000000000 12 f27( 1058944)0.000000000 -8 66( 16826582)0.000000000 -8 e6( 16822338)0.000000000 27F: DELTA: 0x242F400009FCA000 0A0A41967884F5D6 14 34a7( 267131)0.000000000 12 5b( 1064451)0.000000000 12 bb( 1060051)0.000000000 12 137( 1064641)0.000000000 12 14b( 1059721)0.000000000 
12 1cb( 1063913)0.000000000 12 227( 1058434)0.000000000 12 237( 1062931)0.000000000 7F: DELTA: 0x9AEF400009FCA000 0A0A41967884F5D6 -6 12( 67240251)0.000000000 -6 32( 66978036)0.000000000 -8 52( 16812266)0.000000000 -8 b2( 16742497)0.000000000 12 997( 1057305)0.000000005 -8 72( 16743940)0.000000008 -8 12( 16809826)0.000000030 12 d6b( 1040123)0.000000043 29F: DELTA: 0x2CC3400009FCA000 0A0A41967884F5D6 12 61e( 1041497)0.001391186 12 729( 1055557)0.003001539 12 91e( 1041758)0.008181266 12 f29( 1041977)0.034431422 2FF: DELTA: 0x467F400009FCA000 0A0A41967884F5D6 -6 a( 67162084)0.000284374 12 c17( 1041969)0.032696417 3F: DELTA: 0x89C7400009FCA000 0A0A41967884F5D6 -6 10( 67060938)0.017930280 21F: DELTA: 0x0A73400009FCA000 0A0A41967884F5D6 12 4c1( 1055397)0.008769471 1FF: DELTA: 0x01DF400009FCA000 0A0A41967884F5D6 -6 12( 67158377)0.005435176 12 ceb( 1055462)0.005689278 5F: DELTA: 0x925B400009FCA000 0A0A41967884F5D6 12 8c6( 1055190)0.033889247 3FF: DELTA: 0x8B1F400009FCA000 0A0A41967884F5D6 -6 6( 67166263)0.000008006 -6 26( 67061803)0.033983055 17F: DELTA: 0xDF8F400009FCA000 0A0A41967884F5D6 12 17( 1059398)0.000000000 12 c7( 1058635)0.000000000 12 f7( 1058936)0.000000000 12 15b( 1059633)0.000000000 12 1a7( 1061491)0.000000000 12 25b( 1061656)0.000000000 12 2a7( 1061160)0.000000000 12 307( 1058587)0.000000000 checking pair with DELTA: 0x9C385FFFD517C000 306C5C1B4D5AB7C6 si0: 0x3FEAE99F258B5DE7 09F745C8569D55EC,0x7F3878FC16BE1A2D 2DD9E922BA431F8B si1: 0xA3B2899F50739DE6 D98AE9AD09429E26,0xAAC4D1CD87D69C2E 70FEC2F54B2666C3 2D6: DELTA: 0x2D609FFFD517C000 306C5C1B4D5AB7C6 14 3eb0( 266018)0.000054283 checking pair with DELTA: 0x886D80002EBC1FFF EBA31452485D63B9 si0: 0xBF85BAB7F7A88915 DD24DFCE63656BDD,0x1D5F2A9E89966A03 3142879BE63649DD si1: 0x37183AB7C8EC6915 F181CB7C1B080824,0xC92276BC926F7002 47E39578BCDCFFF1 checking pair with DELTA: 0x3043000012E7A000 4F55B89E15612A9D si0: 0xB52CE7856DE4DCAE 576D37344D0DE947,0x9055C7B98826164F 4FAAEB6EB2A3DC9F si1: 0x84E9E7855AFD3CAE 
08177E9637ACBEAA,0xA6DE91805DB5FD1D B80EAD3EBF24CB03 checking pair with DELTA: 0xDB780000301CDFFF EFC16152F51E792F si0: 0x63684BE2D0185347 9CCA1B957F53D05A,0x80FA168A7F074369 F0A5BC2519C96861 si1: 0x87F04BE29FFB7347 AD08BA428A35572B,0x5B97EE447686113E FB2C8C166671C56D 8B: DELTA: 0x37BD6000301CDFFF EFC16152F51E792F 14 3f77( 257778)0.000000015 14 1f77( 266226)0.000002249 -8 f6( 16747303)0.000004836 14 8b( 258467)0.000761714 14 e4b( 258533)0.001952468 14 208b( 265599)0.020378990 -8 b6( 16801849)0.032928680 14 1e4b( 265538)0.045762519 1CB: DELTA: 0x1AE56000301CDFFF EFC16152F51E792F -8 90( 16809736)0.000000035 -8 d0( 16749917)0.000465123 -8 10( 16803470)0.002631082 28B: DELTA: 0x6FFD6000301CDFFF EFC16152F51E792F -8 3a( 16812939)0.000000000 -8 fa( 16744839)0.000000045 -8 7a( 16749044)0.000105850 -8 92( 16749408)0.000197290 -8 12( 16750130)0.000662933 -8 ba( 16802080)0.023187546 -8 52( 16802065)0.023723934 3CB: DELTA: 0x53256000301CDFFF EFC16152F51E792F 14 1ec( 266870)0.000000000 14 bec( 267106)0.000000000 14 e1c( 267279)0.000000000 14 149c( 266995)0.000000000 14 18dc( 267103)0.000000000 14 249c( 267132)0.000000000 14 3e1c( 267193)0.000000000 14 49c( 257280)0.000000000 38B: DELTA: 0x8C1D6000301CDFFF EFC16152F51E792F 14 3f07( 265916)0.000243974 14 1c0b( 265914)0.000251174 14 c0b( 258412)0.000343271 14 38b7( 265749)0.002629606 14 20fb( 258612)0.005894475 14 23f7( 265565)0.032043522 14 30fb( 265540)0.044574402 18B: DELTA: 0x53DD6000301CDFFF EFC16152F51E792F 14 3c67( 266192)0.000003825 14 39b( 266165)0.000005816 -8 d2( 16747988)0.000016635 14 2f9b( 266035)0.000042097 14 3067( 258273)0.000043503 14 2c67( 258349)0.000135828 14 7d7( 265938)0.000177004 -8 92( 16804377)0.000598385 24B: DELTA: 0xA8F56000301CDFFF EFC16152F51E792F 14 1480( 265808)0.001148715 checking pair with DELTA: 0xAC575FFFEABDA000 4916E783B7945A0F si0: 0xEA55F2E4610D825A C9C89FD187E1A389,0xA809E3872B037628 4612D5C116F50F8F si1: 0x3DFE92E4764FE25A 80B1B84DD04D497A,0x88A56FF680022BCF 6C25989E452C8A1B B: DELTA: 
0x363E7FFFEABDA000 4916E783B7945A0F -6 12( 67172158)0.000000033 real 519m55,784s user 13633m42,794s sys 8m12,787s

Stage 1, filling set with random increments set size:16384, stream_length:4294967296, top bits:19 Stage 2, Sorting streams Stage 3, Comparing streams pairs to consider: 5 checking pair with DELTA: 0xBF6CFFFFE20A6000 1237BFB750ABC5D4 si0: 0x7B36DD275880DB17 CADB2D976848C718,0xF82B95907E57998B 00CC0B074806B4AB si1: 0xBBC9DD2776767B17 B8A36DE0179D0144,0x9B02AF6C89EFC1D7 EA92FCC9A4D2ADBB clean table 32 18 fill table 32 18 to 4220518 clean table 32 16 fill table 32 16 to 4299161 clean table 32 14 fill table 32 14 to 4613734 clean table 32 12 fill table 32 12 to 5872025 clean table 32 10 fill table 32 10 to 10905190 clean table 32 8 fill table 32 8 to 31037849 clean table 32 6 fill table 32 6 to 111568486 clean table 32 4 fill table 32 4 to 433691033 clean table 32 2 fill table 32 2 to 1722181222 10E: DELTA: 0x3D063FFFE20A6000 1237BFB750ABC5D4 12 230( 1058325)0.000000000 12 530( 1057565)0.000000000 12 1f0( 1057502)0.000000000 12 130( 1040174)0.000000009 12 2f0( 1040192)0.000000010 12 930( 1056696)0.000000099 12 af0( 1056646)0.000000146 12 a30( 1040910)0.000002765 checking pair with DELTA: 0x80B2C00052319FFF 85F7EBD9A5CACFEE si0: 0xFE11F43F71412700 F6B7489194A2D4B7,0xE2AF29ED1B20D157 01C834D59668D06F si1: 0x7D5F343F1F0F8701 70BF5CB7EED804C9,0xD8493089E0552D49 D998F77D58F2D3C7 1F4: DELTA: 0x6FCE400052319FFF 85F7EBD9A5CACFEE -6 c( 67043292)0.000000000 -6 2c( 67161936)0.000043402 3F4: DELTA: 0x608E400052319FFF 85F7EBD9A5CACFEE 12 70( 1042089)0.009535608 12 f70( 1055012)0.014186071 12 3b0( 1042190)0.018029377 2F4: DELTA: 0xE82E400052319FFF 85F7EBD9A5CACFEE -6 4( 67198002)0.000000000 -6 24( 67032108)0.000000000 12 ec0( 1040797)0.000001182 -8 44( 16801938)0.003888811 -8 c4( 16801805)0.004755570 12 d0c( 1041987)0.004963123 12 6c0( 1054956)0.020123604 12 ac0( 1054907)0.027260483 F4: DELTA: 0xF76E400052319FFF 85F7EBD9A5CACFEE 12 800( 1056020)0.000015939 checking pair with DELTA: 0xB1E3C0007875E000 2C73576CA4DE67DE si0: 0xCB1F2D8DA9A5ACB8 079DA5BC62AB8EF8,0xF136A63903CFB057 
99427D8A8F043A5D si1: 0x193B6D8D312FCCB7 DB2A4E4FBDCD271A,0x009C79F3B34D2CD3 22B43E5851BF9275 1B4: DELTA: 0x6B7C40007875E000 2C73576CA4DE67DE 14 2b00( 257580)0.000000000 14 1b00( 258140)0.000000760 14 3b00( 265893)0.000046023 14 1700( 258412)0.000046388 14 b00( 265785)0.000214713 14 700( 265705)0.000653519 14 3700( 265657)0.001259998 14 2700( 258731)0.004023711 checking pair with DELTA: 0x45728000B24DC000 1AFDFE5D4232E832 si0: 0x3456181E0244AE3D 9CFEF32202AB3CE3,0xC8CB61ECFAF163DF C41D4E73484C4E1D si1: 0xEEE3981D4FF6EE3D 8200F4C4C07854B1,0xDD8334AD194ECC83 F2A9D06A4F12C145 46: DELTA: 0x07CF4000B24DC000 1AFDFE5D4232E832 -6 c( 67065092)0.047214990 146: DELTA: 0xD5EF4000B24DC000 1AFDFE5D4232E832 14 700( 267561)0.000000000 14 b00( 267517)0.000000000 14 3700( 268055)0.000000000 14 3b00( 267320)0.000000000 12 700( 1065199)0.000000000 12 b00( 1065864)0.000000000 12 f00( 1031945)0.000000000 12 300( 1035611)0.000000000 346: DELTA: 0x722F4000B24DC000 1AFDFE5D4232E832 -6 4( 67216858)0.000000000 -6 24( 67010410)0.000000000 12 1c0( 1038151)0.000000000 12 2c0( 1038787)0.000000000 12 6c0( 1057954)0.000000000 12 5c0( 1057500)0.000000000 12 9c0( 1057053)0.000000006 12 ec0( 1040158)0.000000008 real 118m52,649s user 2853m41,628s sys 1m57,080s

A hypothetical example showing that this can be a real issue under the right/wrong circumstances

For research (in physics, for example) 5 sigma is a requirement, and that is for the experiment, not for the PRNG, so it would be unreasonable if the PRNG consumed more than 1% of that. If we start from where we left off, we have 115 bits in the seed to begin with, minus 32 as we will consume 2^32 values from each stream, and minus 30 for the ~1% at 5 sigma. That leaves us with 53 bits in the seed; we now add 29 bits from the increment, leaving us with 82 bits. These 82 bits would be expected to be affected by a collision if more than half are used (like in a birthday attack). That leaves us with being able to consume 2^41 streams, assuming they are perfectly randomly initialized. This compares to an ideal 2×128-bit seeded PRNG where we could consume 2^97 with the same 5 sigma and 2^32 read constraints.

How long would a supercomputer need to hit a correlated pair? If we assume PCG-DXSM needs 10 CPU operations per output, we read 2^32 values per stream as in our code, and a 1 000 000 tera(fl)op/sec supercomputer is used, it would take about a day:

2^41 * 2^32 * 10 / (1 000 000 * 1000^4 sec^-1 * 3600 sec/h * 24 h/day) = 1.09 … days

The reader is here also reminded that we have not attempted to exploit the 2nd XOR in DXSM, that the statistical tests used are not very sophisticated, and that we are reading 2^32 values for each stream here. A system favoring reading more on seemingly correlated sequences and less on others would need less data to run into problems.
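The bookkeeping above can be written down as a small calculation. This is only a sketch of the arithmetic in this section with hypothetical function names. The result of about 1.09 days corresponds to a machine rate of 10^18 operations per second (1 000 000 tera-operations per second), which is the value assumed in the example call below.

```c
#include <assert.h>
#include <stdint.h>

/* log2 of the number of streams that can be consumed before a correlated
   pair is expected: usable bits are halved as in a birthday bound. */
static int usable_stream_bits(int seed_bits, int inc_bits,
                              int read_bits, int margin_bits) {
    return (seed_bits - read_bits - margin_bits + inc_bits) / 2;
}

/* Days needed to generate 2^stream_bits streams of 2^read_bits outputs each. */
static double days_to_consume(int stream_bits, int read_bits,
                              double ops_per_output, double ops_per_second) {
    assert(stream_bits >= 0 && stream_bits < 64);
    assert(read_bits >= 0 && read_bits < 64);
    double outputs = (double)(1ULL << stream_bits) * (double)(1ULL << read_bits);
    return outputs * ops_per_output / ops_per_second / 86400.0;
}
```

With the numbers from the text, `usable_stream_bits(115, 29, 32, 30)` gives 41, the ideal case `usable_stream_bits(128, 128, 32, 30)` gives 97, and `days_to_consume(41, 32, 10.0, 1e18)` gives a little over 1.09.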

A workaround

The obvious one is not to use PCG-DXSM with (increment based) streams. But suppose continued use of PCG-DXSM with streams is a goal, and we assume the issue described here represents all the correlated streams. (That is certainly not true: we ignored the 2nd XOR, likely have not found all correlation cases beyond that either, and only considered reasonably aligned streams; there are also other little details here and there that I knowingly did not pay adequate attention to.) Then one can construct increments in a systematic way instead of randomly, so that they always differ in bits that have been required to be identical in a collision. That can be achieved easily by using a counter fed through some bijective function for some extra pseudo-randomness. The remaining bits could then still be set somewhat randomly. With that, and with 21 bits instead of the 19 bits used in the attack removed (I have also found a collision with 2x21 bits randomized), we have 127 - 63 - 2×21 = 22 bits left and thus ~4 million increments. Given there are 2 odd values modulo 4, there are ~8 million non-correlated streams of full 2^128 period (if we limit ourselves to what the implementation here can find), thus 2^(128+23). To clarify, the differing bits we talk about are in the delta between aligned LCG outputs and not in the increment values directly.
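The "counter fed through some bijective function" part could look like the following sketch. The mixer `mix22`, its constants and the 22-bit width are chosen purely for illustration. How the resulting values are mapped onto the relevant bits of the delta between aligned LCG outputs is not specified here and is not attempted by this code.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define BITS 22u
#define MASK ((1u << BITS) - 1u)

/* Hypothetical bijective mixer on 22-bit values: multiplications by odd
   constants modulo 2^22 and right xorshifts are each invertible. */
static uint32_t mix22(uint32_t x) {
    x &= MASK;
    x = (x * 0x2545F5u) & MASK;
    x ^= x >> 11;
    x = (x * 0x1B873Du) & MASK;
    x ^= x >> 11;
    return x;
}

/* Counts the distinct outputs produced by the counter values 0 .. 2^22 - 1. */
static uint32_t count_distinct_outputs(void) {
    static uint8_t seen[1u << BITS];
    uint32_t distinct = 0;
    memset(seen, 0, sizeof seen);
    for (uint32_t c = 0; c <= MASK; c++) {
        uint32_t y = mix22(c);
        assert(y <= MASK);
        if (!seen[y]) {
            seen[y] = 1;
            distinct++;
        }
    }
    return distinct;
}
```

Because the mixer is a bijection, every counter value yields a different 22-bit result, so no two streams built this way can share all of these bits; `count_distinct_outputs()` confirms this by returning 2^22.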

A solution

To solve this, we first need to understand why PCG has repeatedly been plagued by stream correlation issues, both PCG “1.0” and now PCG 2.0 (DXSM). The reason appears to be that when using the increment and the seed and setting them randomly, one inevitably challenges the mixer after the LCG with every constant difference. That is fundamentally very similar to feeding a mixer with a counter instead of an LCG. But the mixers seem designed to require more randomness than a counter provides.

The solution to this seems to be to give up on one of the assumptions leading to this, and these are 1. LCG, 2. random use of the increment, 3. a “simple” mixer.

Now, if the simple mixer is replaced by a complex one, then the LCG can be replaced by a counter, more like in XSM64, because if the mixer is strong enough to work with a counter as input then the extra multiplication of the LCG is no longer useful and just slows it down.

I also believe the solution is to spend more time analyzing the existing PRNGs instead of creating new ones. There are PRNGs that are fast, have no known issues, and seem to have received limited attention in terms of analysis. IMHO a new generator should only be created when it's better in some way than all other existing generators.

Final words

I am curious whether XSM64 has such issues too. From a quick glance it seemed designed with this sort of “correlation from offset” problem in mind, or at least I was too dumb to immediately produce a correlation the same way it works here. Sadly I did not have enough time to really investigate XSM64, so that's not meant as a recommendation, just a statement of my being curious about …

I hope this whole blog entry is helpful to someone

More issues

After writing the above, it came to my mind that, as I already have the code to test for correlations between streams, it would be very easy to test this within the same period / LCG / stream / increment. After all, we see correlations between streams that are misaligned by multiples of 2^109 with different increments. So what about within the same stream/increment? I tested streams of 2^26 length, misaligned by all the 65536 possibilities of the top 16 bits of the 128 bits. The 3 pairs tested found 13409, 15950 and 15360 correlations. I also tried a random subset of the top 32 bits instead of 16, but these found no additional correlations with my quick tests. I retested this with PractRand to be more sure my tests are not all faulty, and PractRand seems better at detecting these.

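    /* Two generators on the same stream (same increment), misaligned by 2^109 steps. */
    STATET inc1 = 12345;
    STATET inc2 = inc1;
    STATET state1 = 31415;
    STATET state2 = step(state1, inc1, ((STATET)1)<<109);
    int64_t stream_length = 1LL<<60;

    for(int64_t i = 0; i < stream_length; i++) {
        OUTT v1, v2;
        v1 = pcg_dxsm(&state1, multiplier, inc1);
        v2 = pcg_dxsm(&state2, multiplier, inc2);
        v1 ^= v2;                   /* xor the outputs of both generators */
        fwrite(&v1, 8, 1, stdout);  /* raw 64-bit words, read by PractRand on stdin */
    }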
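The `step` function used in the snippet above is not shown in this post. A logarithmic-time LCG jump-ahead is the usual way to do such a misalignment, so the following is a sketch of what it could look like, assuming a 64-bit state as a scaled-down model and an example multiplier; `lcg_jump` and the other names are hypothetical and not taken from the actual source code.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: example multiplier with MULT % 4 == 1 (full period with odd increment). */
#define MULT 0xda942042e4dd58b5ULL

static uint64_t lcg_next(uint64_t state, uint64_t inc) {
    return state * MULT + inc;
}

/* Advance the LCG by delta steps in O(log delta) by repeatedly squaring
   the affine map state -> state * mult + inc. */
static uint64_t lcg_jump(uint64_t state, uint64_t inc, uint64_t delta) {
    uint64_t cur_mult = MULT, cur_inc = inc;   /* map for 2^k steps        */
    uint64_t acc_mult = 1, acc_inc = 0;        /* accumulated map so far   */
    while (delta > 0) {
        if (delta & 1) {
            acc_mult *= cur_mult;
            acc_inc = acc_inc * cur_mult + cur_inc;
        }
        cur_inc = (cur_mult + 1) * cur_inc;
        cur_mult *= cur_mult;
        delta >>= 1;
    }
    return acc_mult * state + acc_inc;
}

/* Returns 1 if jumping by 2^(64-b) steps changes only the top b state bits. */
static int jump_changes_only_top_bits(uint64_t state, uint64_t inc, unsigned b) {
    assert((inc & 1) == 1 && b >= 1 && b <= 62);
    uint64_t moved = lcg_jump(state, inc, 1ULL << (64 - b));
    uint64_t low_mask = (1ULL << (64 - b)) - 1;
    return ((moved ^ state) & low_mask) == 0;
}
```

In this 64-bit model, `jump_changes_only_top_bits(31415, 12345, 19)` returns 1: the low 45 bits repeat with period 2^45, so a jump by 2^45 steps can only alter the top 19 bits. This mirrors the 128-bit situation, where a misalignment by a multiple of 2^109 only touches the top 19 bits of the state.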

step 1<<109
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 3.6 seconds
  no anomalies in 196 test result(s)

rng=RNG_stdin64, seed=unknown
length= 256 megabytes (2^28 bytes), time= 8.4 seconds
  no anomalies in 213 test result(s)

rng=RNG_stdin64, seed=unknown
length= 512 megabytes (2^29 bytes), time= 16.3 seconds
  no anomalies in 229 test result(s)

rng=RNG_stdin64, seed=unknown
length= 1 gigabyte (2^30 bytes), time= 29.9 seconds
  no anomalies in 246 test result(s)

rng=RNG_stdin64, seed=unknown
length= 2 gigabytes (2^31 bytes), time= 52.3 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:cross               R= +4.9   p = 1.8e-4    unusual
  ...and 262 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 4 gigabytes (2^32 bytes), time= 94.4 seconds
  no anomalies in 279 test result(s)

rng=RNG_stdin64, seed=unknown
length= 8 gigabytes (2^33 bytes), time= 179 seconds
  no anomalies in 295 test result(s)

rng=RNG_stdin64, seed=unknown
length= 16 gigabytes (2^34 bytes), time= 342 seconds
  no anomalies in 311 test result(s)

rng=RNG_stdin64, seed=unknown
length= 32 gigabytes (2^35 bytes), time= 663 seconds
  Test Name                       Raw       Processed     Evaluation
  [Low16/64]FPF-14+6/16:all       R= -5.9   p =1-2.3e-5   mildly suspicious
  ...and 324 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 64 gigabytes (2^36 bytes), time= 1298 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(15,14-3)           R= +8.7   p = 2.3e-7    mildly suspicious
  FPF-14+6/16:all                 R= +5.6   p = 1.0e-4    unusual
  TMFn(2+6):wl                    R= +25.9  p~= 1e-8      very suspicious
  [Low16/64]TMFn(2+4):wl          R= +28.2  p~= 7e-10     very suspicious
  [Low4/64]TMFn(2+2):wl           R= +25.1  p~= 3e-8      suspicious
  ...and 335 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 128 gigabytes (2^37 bytes), time= 2268 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(15,14-2)           R= +15.2  p = 4.3e-13   VERY SUSPICIOUS
  FPF-14+6/16:all                 R= +10.3  p = 3.9e-9    VERY SUSPICIOUS
  TMFn(2+6):wl                    R= +58.1  p~= 7e-28     FAIL !!
  TMFn(2+7):wl                    R= +35.3  p~= 3e-14     FAIL
  TMFn(2+8):wl                    R= +29.8  p~= 7e-11     VERY SUSPICIOUS
  [Low16/64]FPF-14+6/16:cross     R= +5.8   p = 3.2e-5    mildly suspicious
  [Low16/64]TMFn(2+4):wl          R= +58.1  p~= 6e-28     FAIL !!
  [Low16/64]TMFn(2+5):wl          R= +36.2  p~= 1e-14     FAIL
  [Low16/64]TMFn(2+6):wl          R= +31.1  p~= 1e-11     VERY SUSPICIOUS
  [Low16/64]TMFn(2+7):wl          R= +19.3  p~= 1e-5      unusual
  [Low4/64]TMFn(2+2):wl           R= +59.6  p~= 9e-29     FAIL !!
  [Low4/64]TMFn(2+3):wl           R= +34.9  p~= 6e-14     FAIL
  [Low4/64]TMFn(2+4):wl           R= +31.0  p~= 1e-11     VERY SUSPICIOUS
  ...and 342 test result(s) without anomalies

step 1<<110
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.4 seconds
  no anomalies in 196 test result(s)

rng=RNG_stdin64, seed=unknown
length= 256 megabytes (2^28 bytes), time= 6.5 seconds
  no anomalies in 213 test result(s)

rng=RNG_stdin64, seed=unknown
length= 512 megabytes (2^29 bytes), time= 14.6 seconds
  no anomalies in 229 test result(s)

rng=RNG_stdin64, seed=unknown
length= 1 gigabyte (2^30 bytes), time= 26.1 seconds
  no anomalies in 246 test result(s)

rng=RNG_stdin64, seed=unknown
length= 2 gigabytes (2^31 bytes), time= 49.2 seconds
  no anomalies in 263 test result(s)

rng=RNG_stdin64, seed=unknown
length= 4 gigabytes (2^32 bytes), time= 94.8 seconds
  no anomalies in 279 test result(s)

rng=RNG_stdin64, seed=unknown
length= 8 gigabytes (2^33 bytes), time= 180 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(16,14-6)           R= +7.8   p = 5.1e-6    unusual
  ...and 294 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 16 gigabytes (2^34 bytes), time= 325 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(16,14-5)           R= +12.5  p = 3.4e-10   very suspicious
  [Low16/64]FPF-14+6/16:all       R= +4.9   p = 4.5e-4    unusual
  ...and 309 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 32 gigabytes (2^35 bytes), time= 599 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(16,14-5)           R= +25.2  p = 8.7e-21   FAIL !
  ...and 324 test result(s) without anomalies

step 1<<111
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.6 seconds
  no anomalies in 196 test result(s)

rng=RNG_stdin64, seed=unknown
length= 256 megabytes (2^28 bytes), time= 7.7 seconds
  no anomalies in 213 test result(s)

rng=RNG_stdin64, seed=unknown
length= 512 megabytes (2^29 bytes), time= 15.1 seconds
  no anomalies in 229 test result(s)

rng=RNG_stdin64, seed=unknown
length= 1 gigabyte (2^30 bytes), time= 28.4 seconds
  no anomalies in 246 test result(s)

rng=RNG_stdin64, seed=unknown
length= 2 gigabytes (2^31 bytes), time= 52.3 seconds
  no anomalies in 263 test result(s)

rng=RNG_stdin64, seed=unknown
length= 4 gigabytes (2^32 bytes), time= 92.2 seconds
  no anomalies in 279 test result(s)

rng=RNG_stdin64, seed=unknown
length= 8 gigabytes (2^33 bytes), time= 172 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(17,14-7)           R= +9.1   p = 4.5e-7    mildly suspicious
  [Low16/64]FPF-14+6/16:cross     R= +7.8   p = 6.4e-7    suspicious
  ...and 293 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 16 gigabytes (2^34 bytes), time= 337 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:(17,14-6)           R= +16.4  p = 1.4e-12   VERY SUSPICIOUS
  FPF-14+6/16:(20,14-8)           R= +16.1  p = 1.1e-11   VERY SUSPICIOUS
  FPF-14+6/16:cross               R= +6.5   p = 8.5e-6    mildly suspicious
  [Low16/64]FPF-14+6/16:cross     R= +18.2  p = 1.2e-15   FAIL !
  ...and 307 test result(s) without anomalies

step 1<<112
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.8 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:cross               R= +7.9   p = 5.5e-7    suspicious
  ...and 195 test result(s) without anomalies

rng=RNG_stdin64, seed=unknown
length= 256 megabytes (2^28 bytes), time= 7.9 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:cross               R= +16.0  p = 7.3e-14   FAIL
  ...and 212 test result(s) without anomalies

step 1<<115
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.9 seconds
  Test Name                       Raw       Processed     Evaluation
  FPF-14+6/16:cross               R= +67.0  p = 9.4e-57   FAIL !!!!
  TMFn(2+0):wl                    R= +23.5  p~= 1e-7      suspicious
  [Low16/64]FPF-14+6/16:(4,14-3)  R= +13.9  p = 6.3e-12   VERY SUSPICIOUS
  [Low16/64]FPF-14+6/16:(5,14-4)  R= +8.1   p = 1.4e-6    unusual
  [Low16/64]FPF-14+6/16:all       R= +6.4   p = 1.5e-5    mildly suspicious
  [Low16/64]FPF-14+6/16:cross     R= +6.5   p = 1.3e-5    mildly suspicious
  ...and 190 test result(s) without anomalies

step 1<<120
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.6 seconds
  Test Name                       Raw       Processed     Evaluation
  BCFN(2+13,13-9,T)               R= +26.0  p = 5.7e-7    mildly suspicious
  Gap-16:A                        R= +6.9   p = 2.5e-5    mildly suspicious
  FPF-14+6/16:(0,14-0)            R= +7.5   p = 1.6e-6    unusual
  FPF-14+6/16:(1,14-0)            R= +18.0  p = 2.8e-16   FAIL
  FPF-14+6/16:(2,14-0)            R= +16.0  p = 1.8e-14   FAIL
  FPF-14+6/16:(3,14-1)            R= +18.7  p = 2.4e-16   FAIL
  FPF-14+6/16:(4,14-2)            R= +7.5   p = 2.2e-6    unusual
  FPF-14+6/16:(5,14-2)            R= +10.7  p = 3.7e-9    very suspicious
  FPF-14+6/16:(6,14-3)            R= +10.7  p = 3.8e-9    very suspicious
  FPF-14+6/16:(7,14-4)            R= +25.3  p = 1.4e-20   FAIL !
  FPF-14+6/16:all                 R= +94.7  p = 2.4e-88   FAIL !!!!!
  FPF-14+6/16:cross               R= +2465  p = 3e-2074   FAIL !!!!!!!!
  TMFn(2+0):wl                    R=+968.3  p~= 6e-576    FAIL !!!!!!!
  TMFn(2+1):wl                    R=+487.2  p~= 2e-286    FAIL !!!!!!
  TMFn(2+2):wl                    R=+232.7  p~= 5e-133    FAIL !!!!!
  [Low16/64]BCFN(2+1,13-4,T)      R= +10.9  p = 1.0e-4    unusual

step 1<<127
RNG_test using PractRand version 0.95
RNG = RNG_stdin64, seed = unknown
test set = core, folding = standard (64 bit)

rng=RNG_stdin64, seed=unknown
length= 128 megabytes (2^27 bytes), time= 2.5 seconds
  Test Name                       Raw       Processed     Evaluation
  BCFN(2+0,13-3,T)                R=+24986  p = 0         FAIL !!!!!!!!
  BCFN(2+1,13-3,T)                R=+24275  p = 0         FAIL !!!!!!!!
  BCFN(2+2,13-3,T)                R=+24133  p = 0         FAIL !!!!!!!!
  BCFN(2+3,13-3,T)                R=+23873  p = 0         FAIL !!!!!!!!
  BCFN(2+4,13-4,T)                R=+30456  p = 0         FAIL !!!!!!!!
  BCFN(2+5,13-5,T)                R=+37691  p = 0         FAIL !!!!!!!!
  BCFN(2+6,13-5,T)                R=+36654  p = 0         FAIL !!!!!!!!
  BCFN(2+7,13-6,T)                R=+43148  p = 0         FAIL !!!!!!!!
  BCFN(2+8,13-6,T)                R=+39020  p = 0         FAIL !!!!!!!!
  BCFN(2+9,13-7,T)                R=+39135  p = 0         FAIL !!!!!!!!
  BCFN(2+10,13-8,T)               R=+33354  p = 8e-8466   FAIL !!!!!!!!
  BCFN(2+11,13-8,T)               R=+19688  p = 1e-4997   FAIL !!!!!!!!
  BCFN(2+12,13-9,T)               R=+11650  p = 7e-2619   FAIL !!!!!!!!
  BCFN(2+13,13-9,T)               R= +5830  p = 3e-1311   FAIL !!!!!!!!
  DC6-9x1Bytes-1                  R= +2564  p = 1e-1574   FAIL !!!!!!!!
  Gap-16:A                        R=+362.0  p = 3.5e-308  FAIL !!!!!!
  Gap-16:B                        R=+576.2  p = 2.4e-461  FAIL !!!!!!!
  FPF-14+6/16:(2,14-0)            R=+663.1  p = 2.7e-613  FAIL !!!!!!!
  ...